What is Face Recognition - Dlib?

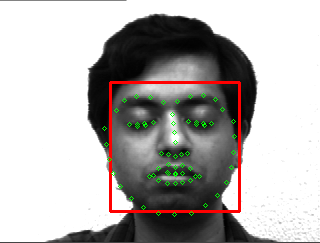

After face detection, feature values (a face descriptor) are extracted from each detected face.

Compute the norm (Euclidean distance) between these feature values and those of every known face to find the face with the shortest distance. If that distance is below the threshold, use the matching face as the predicted value.
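The matching step described above can be sketched with NumPy alone. This is a minimal sketch, not the exact code used later in this post: the helper name `match_descriptor`, the toy 3-dimensional vectors, and the label list are assumptions made for illustration (the descriptors produced by Dlib's face recognition model have 128 dimensions).

```python
import numpy as np

def match_descriptor(query, known, labels, threshold=0.5):
    """Hypothetical helper: return (label, distance) for the nearest known
    descriptor, or (None, distance) when the nearest one is above the threshold."""
    known = np.asarray(known, dtype=np.float64)
    query = np.asarray(query, dtype=np.float64)
    # Euclidean distance from the query to every known descriptor (one per row).
    distances = np.linalg.norm(known - query, axis=1)
    best = int(np.argmin(distances))
    best_distance = float(distances[best])
    if best_distance <= threshold:
        return labels[best], best_distance
    return None, best_distance

# Toy data standing in for real descriptors.
known = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
labels = ["subject01", "subject02"]
label, distance = match_descriptor([0.9, 0.1, 0.0], known, labels)
far_label, far_distance = match_descriptor([5.0, 5.0, 5.0], known, labels)
```

Here the first query lies close to the second known vector, so it is matched to `subject02`, while the second query is far from both and is left unidentified.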

In my implementation, Dlib was more effective than LBPH.

Example Code

Detecting Face

    import os

    import cv2
    import dlib
    import numpy as np
    from PIL import Image

    # Face detector, 68-point landmark predictor, and descriptor extractor.
    face_detector = dlib.get_frontal_face_detector()
    points_detector = dlib.shape_predictor('shape_predictor_68_face_landmarks.dat')
    face_descriptor_extractor = dlib.face_recognition_model_v1('dlib_face_recognition_resnet_model_v1.dat')

    index = {}               # Maps each descriptor row to its source image path.
    idx = 0
    face_descriptors = None  # Will be a NumPy array holding one face descriptor per row.

    paths = [os.path.join('yalefaces/train', f) for f in os.listdir('yalefaces/train')]
    for path in paths:
        image = Image.open(path).convert('RGB')
        image_np = np.array(image, 'uint8')
        face_detection = face_detector(image_np, 1)  # 1 = upsample the image once
        for face in face_detection:
            points = points_detector(image_np, face)

            # Compute the descriptor before drawing, so the annotations
            # do not alter the pixels it is computed from.
            face_descriptor = face_descriptor_extractor.compute_face_descriptor(image_np, points)
            face_descriptor = np.asarray(list(face_descriptor), dtype=np.float64)
            face_descriptor = face_descriptor[np.newaxis, :]

            # Draw the bounding box and the landmark points.
            l, t, r, b = face.left(), face.top(), face.right(), face.bottom()
            cv2.rectangle(image_np, (l, t), (r, b), (0, 0, 255), 2)
            for point in points.parts():
                cv2.circle(image_np, (point.x, point.y), 2, (0, 255, 0), 1)

            # Append this face's descriptor as a new row.
            if face_descriptors is None:
                face_descriptors = face_descriptor
            else:
                face_descriptors = np.concatenate((face_descriptors, face_descriptor), axis=0)

            index[idx] = path
            idx += 1

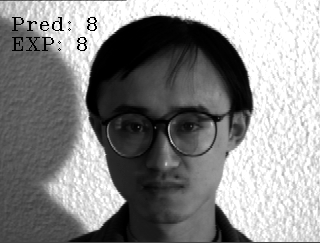

The code draws a bounding box and the landmark points on each detected face, and stores one descriptor per face in face_descriptors.
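The detection loop grows `face_descriptors` with `np.concatenate` once per face. One alternative, sketched here under stated assumptions, is to collect the descriptors in a list and stack them once at the end. The function `fake_descriptor` and the file names are hypothetical stand-ins so the example runs without Dlib or the dataset; in real use the rows would come from `compute_face_descriptor`.

```python
import numpy as np

rng = np.random.default_rng(0)

def fake_descriptor():
    # Hypothetical stand-in for compute_face_descriptor: 128 random values.
    return rng.normal(size=128)

rows = []    # One 128-value descriptor per detected face.
index = {}   # Maps each row number to the image it came from.
example_paths = ["subject01.happy.gif", "subject02.sad.gif", "subject03.wink.gif"]
for idx, path in enumerate(example_paths):
    rows.append(np.asarray(fake_descriptor(), dtype=np.float64))
    index[idx] = path

# Stack all rows into a single (number_of_faces, 128) array in one step.
face_descriptors = np.vstack(rows)
```

The resulting array has the same layout as the one built with `np.concatenate`, so the distance computation in the recognition step works on it unchanged.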

Recognizing Face

    # Reuses the imports, detectors, face_descriptors, and index from the detection step.
    from google.colab.patches import cv2_imshow  # Assumes the code runs in Google Colab.

    threshold = 0.5
    predictions = []
    expected = []

    paths = [os.path.join('yalefaces/test', f) for f in os.listdir('yalefaces/test')]
    for path in paths:
        image = Image.open(path).convert('RGB')
        image_np = np.array(image, 'uint8')
        face_detection = face_detector(image_np, 1)
        for face in face_detection:
            points = points_detector(image_np, face)
            face_descriptor = face_descriptor_extractor.compute_face_descriptor(image_np, points)
            face_descriptor = np.asarray(list(face_descriptor), dtype=np.float64)
            face_descriptor = face_descriptor[np.newaxis, :]

            # Euclidean distance from this face to every stored training descriptor.
            distances = np.linalg.norm(face_descriptor - face_descriptors, axis=1)
            min_index = np.argmin(distances)
            min_distance = distances[min_index]

            # Accept the nearest training face only if it is close enough.
            if min_distance <= threshold:
                name_pred = int(os.path.split(index[min_index])[1].split('.')[0].replace('subject', ''))
            else:
                name_pred = 'Not identified'

            # The expected label is the subject number in the file name.
            name_real = int(os.path.split(path)[1].split('.')[0].replace('subject', ''))

            predictions.append(name_pred)
            expected.append(name_real)

            cv2.putText(image_np, 'Pred: ' + str(name_pred), (10, 30), cv2.FONT_HERSHEY_COMPLEX_SMALL, 1, (0, 0, 0))
            cv2.putText(image_np, 'EXP: ' + str(name_real), (10, 50), cv2.FONT_HERSHEY_COMPLEX_SMALL, 1, (0, 0, 0))

        cv2_imshow(image_np)

The code displays each test image annotated with the predicted and expected results, as shown in the image below.
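Since the recognition loop fills the `predictions` and `expected` lists, one way to summarize the result is a simple accuracy score. The sketch below is an assumption about how that could be done, not part of the original code: `label_from_path` and `accuracy` are hypothetical helpers, and the file name and label lists are made-up examples. `label_from_path` mirrors the file-name parsing used in the loop.

```python
import os

def label_from_path(path):
    """Hypothetical helper: extract the subject number from a file name
    such as 'subject03.sad.gif'."""
    name = os.path.split(path)[1]
    return int(name.split('.')[0].replace('subject', ''))

def accuracy(predictions, expected):
    """Hypothetical helper: fraction of faces whose predicted label equals
    the expected one. 'Not identified' never equals an integer label,
    so it counts as a miss."""
    if not expected:
        return 0.0
    correct = sum(p == e for p, e in zip(predictions, expected))
    return correct / len(expected)

# Made-up example values in the same form the recognition loop produces.
example_label = label_from_path('yalefaces/test/subject03.sad.gif')
predictions = [1, 2, 'Not identified', 4]
expected = [1, 2, 3, 5]
score = accuracy(predictions, expected)
```

Counting unidentified faces as misses is a design choice; they could instead be reported separately to distinguish wrong matches from rejected ones.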